Optimizing Auditor Precision: Addressing Biases in Large Language Model Technologies

Published: Jul 3, 2025

Large Language Models (LLMs) are transforming accounting and auditing by improving efficiency, uncovering financial trends and analyzing unstructured data, but using them also introduces risks, especially biases from training data and algorithms. Professionals are encouraged to stay educated on AI trends to use LLMs effectively.

By Karina Kasztelnik, Ph.D.

The domain of accounting and auditing is evolving rapidly with the integration of Large Language Models (LLMs), which offer new possibilities for efficiency and analytical depth. However, this surge of innovation comes with its own set of challenges, particularly in addressing the potential biases that could influence critical financial assessments and decision-making processes.

This article explores the practical applications of LLMs within the accounting and auditing profession, highlighting specific tasks where LLMs can provide substantial benefits, identifying potential biases and suggesting constructive strategies to effectively mitigate these biases.

Benefits include enhancing the scrutiny of financial statements, identifying trends and anomalies, and predicting future performance. Additionally, LLMs can effectively extract valuable information from unstructured data sources, such as emails and social media posts.

Despite the advantages, potential inherent biases can result in significant challenges. These biases can stem from various sources, including the training data, algorithms used for interpretation and specific context of LLM deployment. Therefore, it is crucial to use effective strategies to address and mitigate these biases. Ongoing monitoring and the flexibility to adjust strategies are also essential.

LLM Risk Measurement Frameworks, Risks and Biases

LLM system evaluation is a systematic review of model performance across multiple dimensions, including knowledge, reasoning, tool learning, toxicity, truthfulness, robustness and privacy. This framework categorizes evaluation tasks and methodologies, giving a structured view of LLM capabilities and associated risks. It also underscores the importance of alignment and safety, so that LLM outputs match user expectations and resist malicious exploitation.

The process of identifying risks in LLMs encompasses several fundamental steps. A systematic approach supports the recognition, analysis and effective mitigation of potential biases and risks associated with LLMs.

LLMs are artificial intelligence systems that have been trained extensively on diverse datasets to comprehend and generate human-like text. They are particularly valuable in the field of accounting and auditing, where they can analyze financial documents, interpret tax laws, scrutinize ledger entries and even draft audit reports.

LLMs can rapidly sift through vast amounts of data to identify patterns, anomalies and discrepancies that suggest fraudulent behavior. Their ability to manage tasks ranging from simple computations to complex reasoning has positioned LLMs as an indispensable asset in the financial sector.

However, despite their numerous advantages, LLMs are not without limitations. Auditors must remain vigilant to the potential biases that models may harbor, safeguarding the veracity and integrity of their work.

The risk of bias can manifest in several ways. One is historical bias: LLMs trained on past data may perpetuate outdated norms and practices, potentially overlooking recent changes in accounting standards or tax regulations. Another is sampling bias, where the training data is not representative of the full spectrum of financial scenarios.

Strategies for Navigating Biases

The following strategies can be employed to navigate and mitigate potential biases.

1. Diverse and Up-to-Date Training Data: Regularly updating LLM training data with recent regulatory changes, standards and a broad range of financial scenarios helps reduce historical and sampling biases, improving model accuracy.

2. Human Oversight and Expertise: LLM outputs should support – not replace – professional judgment. Accountants and auditors must critically assess model suggestions within a broader analytical context.

3. Ethical Use and Transparency: Clear documentation of training data sources and decision-making processes is essential. This promotes transparency, accountability and easier identification of embedded biases.

4. Continuous Monitoring and Feedback Loops: Ongoing performance reviews and feedback mechanisms help detect and correct biases, allowing for iterative model improvements.

5. Professional Development and Training: Continuous education in AI, ethics and bias mitigation equips professionals to use LLMs responsibly and adapt to evolving technologies.
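As one illustration of the continuous monitoring strategy above, the following minimal sketch flags review periods in which a model's precision falls noticeably below an agreed baseline. The function name, the period labels, the baseline and the tolerance are all hypothetical; an actual engagement would set these according to its own methodology.

```python
def periods_needing_review(
    precision_by_period: dict[str, float],
    baseline: float,
    tolerance: float = 0.05,
) -> list[str]:
    """Return the periods whose precision dropped below baseline - tolerance.

    All inputs are illustrative assumptions: precision figures would come
    from auditors' own reviews of the model's flagged items.
    """
    threshold = baseline - tolerance
    return [
        period
        for period, precision in precision_by_period.items()
        if precision < threshold
    ]


# Hypothetical quarterly precision figures from auditor review of model flags.
observed = {"2025-Q1": 0.90, "2025-Q2": 0.88, "2025-Q3": 0.79}
flagged_periods = periods_needing_review(observed, baseline=0.90)
```

A period that appears in the result is a prompt for human follow-up, such as checking whether standards or client circumstances have changed since the model was last updated. It is not evidence of bias on its own.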

LLMs represent potent tools for auditors seeking to streamline workflows, analyze data, generate reports, and enhance decision-making processes.

Applications and Strategies for Mitigating Biases

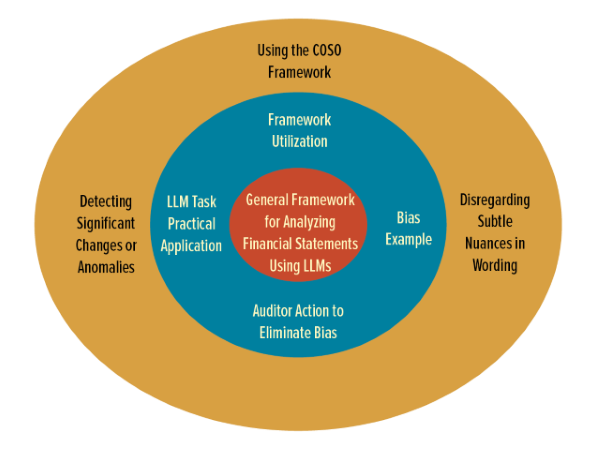

Analyzing Financial Statements

LLMs have emerged as a popular tool for analyzing textual disclosures in financial statements, where they can detect significant changes or anomalies and provide early warnings of discrepancies. According to PwC's whitepaper on AI in financial services, using LLMs can reduce the time spent on initial financial statement reviews by up to 30% (PwC, 2021).

Framework: Utilizing the COSO framework, practitioners can incorporate LLMs in the monitoring component to enhance the effectiveness of internal controls.

Bias Example: Models may overlook subtle wording or context that signals financial misstatements, especially if such cues were not emphasized in the training data.

Auditor Action: Auditors should manually review all LLM-flagged sections so that important signals are not missed or misinterpreted, and should confirm that the analysis is accurate and complete.
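One way an auditor might cross-check completeness is to run a simple, non-LLM comparison of this year's disclosures against last year's and see whether the model flagged the sections that changed most. The sketch below uses Python's standard `difflib` module for that comparison. It is an assumed baseline for illustration only, not a method described in the sources cited here; the section names and the 0.8 similarity threshold are hypothetical.

```python
from difflib import SequenceMatcher


def changed_sections(
    prior: dict[str, str],
    current: dict[str, str],
    threshold: float = 0.8,
) -> list[str]:
    """List current-year sections whose text differs materially from last year.

    Similarity is a character-level ratio between 0.0 and 1.0. Sections
    absent from the prior year are compared against an empty string.
    """
    changed = []
    for name, text in current.items():
        previous_text = prior.get(name, "")
        similarity = SequenceMatcher(None, previous_text, text).ratio()
        if similarity < threshold:
            changed.append(name)
    return changed


# Hypothetical disclosure text keyed by section name.
prior_year = {"Revenue recognition": "Revenue is recognized at delivery."}
current_year = {
    "Revenue recognition": "Revenue is recognized at delivery.",
    "Going concern": "Management has identified conditions that raise doubt.",
}
to_compare = changed_sections(prior_year, current_year)
```

Sections returned by this comparison that the model did not flag are candidates for closer manual reading.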

Risk Assessment

LLMs have proven to be a valuable tool in assessing risk. They analyze historical and real-time data to identify potential risk factors. Deloitte's risk management services have integrated machine learning models, including LLMs, to improve risk assessment accuracy (Deloitte, 2022).

Framework: The ISO 31000 risk management framework can be adapted to include LLM-driven risk analysis as part of the risk assessment process.

Bias Example: If the data used to train an LLM does not include a wide range of risk scenarios, the model may be prone to underemphasizing or overlooking novel risk factors, which could result in incomplete or inaccurate risk assessments.

Auditor Action: Auditors should cross-reference LLM outputs with industry knowledge and professional expertise to fill any analytical gaps and validate completeness. This will allow for a more thorough assessment of potential biases or other limitations.

Regulatory Compliance

LLMs enable companies to stay current with changing regulations by analyzing legal texts and comparing them to existing practices. Firms like KPMG have adopted LLMs to keep track of regulatory changes and ensure compliance. KPMG's AI-driven compliance solutions have helped reduce client compliance costs by up to 25% (KPMG, 2021).

Framework: The Basel Committee on Banking Supervision (BCBS) compliance framework can be enhanced by integrating LLMs to monitor and analyze regulatory changes.

Bias Example: LLMs trained on outdated or incomplete regulatory data may misinterpret or miss the implications of new rules, leading to compliance gaps. For example, a model trained only on historical data may not accurately predict the impact of new regulations.

Auditor Action: To mitigate bias, auditors should verify model findings against regulatory texts and interpret them in the company’s context. They should also assess model performance (e.g., accuracy, precision, recall, F1 score) and ensure regular updates to maintain reliability and accuracy.
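The performance measures named above can be computed directly once auditors have reviewed a sample of the model's findings. The following minimal sketch assumes two hypothetical inputs: the model's flags for a sample of items, and the auditor's own conclusion for each item. The names `Metrics` and `evaluate_flags` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def evaluate_flags(predicted: list[bool], actual: list[bool]) -> Metrics:
    """Compare model flags with auditor-confirmed outcomes for the same items."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")

    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # flagged, confirmed issue
    fp = sum(1 for p, a in pairs if p and not a)      # flagged, no issue
    fn = sum(1 for p, a in pairs if not p and a)      # missed issue
    tn = sum(1 for p, a in pairs if not p and not a)  # correctly not flagged

    total = len(pairs)
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of flagged items that were real issues.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of real issues that the model flagged.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return Metrics(accuracy, precision, recall, f1)


# Hypothetical sample of five reviewed items.
model_flags = [True, True, False, False, True]
auditor_findings = [True, False, False, True, True]
result = evaluate_flags(model_flags, auditor_findings)
```

In compliance work, low recall (missed issues) is often the more costly failure, so it is worth tracking alongside accuracy rather than relying on accuracy alone.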

Fraud Detection

LLMs are used to analyze transactional data and financial reports to identify fraud indicators. EY has implemented LLMs in its forensic services to detect fraudulent activities, leveraging them to analyze transactional data and identify suspicious patterns (EY, 2023).

Framework: The Fraud Risk Management Guide by the Association of Certified Fraud Examiners (ACFE) can be updated to incorporate LLMs for continuous fraud monitoring.

Bias Example: Over-reliance on the model's output can lead to false positives or missed fraud, particularly when the model does not account for context and human behavior behind the flagged transactions.

Auditor Action: While LLMs and machine learning models have the potential to enhance fraud detection, their limitations must be taken into account. Auditors must use a diverse set of techniques and not rely solely on the output of the model.

The Auditor’s Role in Evaluating a Model's Output

In various scenarios, the auditor's role is to undertake a critical evaluation of a model's output, applying professional skepticism, informed judgment and subject-matter expertise. Auditors need to validate results against independent data, established standards and a clear understanding of the client's business.

In summary, this rigorous approach helps ensure that conclusions are accurate, reliable and free from bias. By integrating objective analysis with professional insight, auditors help ensure model outputs support sound, well-informed decisions.

About the Author: Karina Kasztelnik, Ph.D., is Assistant Professor at Tennessee State University in Nashville. Contact her at kkasztel@tnstate.edu.

Source and References

Figure 1 Source: Taxonomy of Major Categories and Sub-Categories of LLM evaluation by Chang, J., Liu, Y., & Li, S. (2023). Evaluating Large Language Models: A Comprehensive Survey. arXiv. https://ar5iv.labs.arxiv.org/html/2310.19736

Brown, A., et al. (2021). “Bias in Financial Language Models: An Investigation.” Journal of Finance and Data Science.

Doe, J., et al. (2023). “Real-Time Insights: The Future of Financial Decision-Making with LLMs.” Accounting Review.

Deloitte. (2022). Risk Management Services. Deloitte Insights.

EY. (2023). Forensic Services: Leveraging AI for Fraud Detection. EY Global.

Green, H., & Patel, S. (2022). “Algorithmic Biases in Financial Auditing: A Critical Analysis.” Auditing: A Journal of Practice & Theory.

KPMG. (2021). AI-Driven Compliance Solutions. KPMG Insights.

Lee, C., & Kim, D. (2022). “Diversifying Data for Equity in Financial Language Modeling.” Journal of Business Ethics.

Martinez, R., & Rivera, S. (2023). “Transparent Algorithms for Trustworthy Financial Auditing.” International Journal of Auditing.

PwC. (2021). AI in Financial Services. PwC Whitepapers.

Singh, R., & Gupta, S. (2023). “Implementing Transparent LLM Frameworks in Auditing: A Case Study.” Journal of Information Systems.

Smith, J., & Johnson, M. (2022). “Automating Accounting: The Role of Large Language Models.” Journal of Accountancy.

Zhao, L., & Wong, T. (2022). “LLMs in Fraud Detection: A New Frontier.” Fraud Magazine.

Zhuo, H., Yu, J., & Wang, X. (2023). Risk Taxonomy, Mitigation and Assessment Benchmarks of Large Language Model Systems. arXiv.
